An often-cited estimate puts worldwide data creation at about 2.5 quintillion bytes per day, which makes data management crucial for success. Data pipeline automation ensures data moves from its various sources to useful insights without hitches.

Automated data pipelines improve information flow, which leads to better decision-making. This article will explore how data pipeline automation works, its advantages, and how to set up your own efficient pipeline.

Data pipeline automation is the practice of moving and processing data from its source to a target location without manual intervention. It starts with data ingestion, where raw data is gathered from different sources. The data then passes through transformation stages that prepare it for analysis.
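As a rough illustration of the stages described above, here is a minimal sketch in Python. The sample CSV text, the function names, and the in-memory `warehouse` list are all assumptions made for this example; a real pipeline would read from and write to actual systems.

```python
import csv
import io

# Hypothetical raw input standing in for a real source (file, database, API).
RAW_CSV = "id,amount\n1, 10.5 \n2,abc\n3,7\n"

def ingest(text):
    """Ingestion: read raw rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transformation: convert types and drop rows that cannot be parsed."""
    cleaned = []
    for row in rows:
        try:
            cleaned.append({"id": int(row["id"]), "amount": float(row["amount"])})
        except ValueError:
            continue  # skip malformed rows instead of failing the whole run
    return cleaned

def load(rows, target):
    """Loading: write prepared rows to the target (here, an in-memory list)."""
    target.extend(rows)
    return len(rows)

def run_pipeline(text, target):
    """Chain the three stages so one call moves data from source to target."""
    return load(transform(ingest(text)), target)

warehouse = []
loaded = run_pipeline(RAW_CSV, warehouse)
```

In this sketch the row with the unparseable amount is skipped, so two of the three source rows reach the target.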

This automation reduces manual data work and ensures smooth data flow across platforms like databases and APIs.

Automated data pipelines are key to efficient data analysis. They help businesses focus on getting insights from their data. This process improves data analysis quality and speeds up making decisions based on the latest data.

With automated data ingestion and processing, companies can use their data better. This keeps them competitive in a fast-changing market. Automation helps create a data-driven culture in companies. It supports quick responses to market shifts and customer needs.

Types of Automated Data Pipelines

Automated data pipelines are classified in different ways based on how they process data and their architecture. Knowing these types helps you choose the right one for your needs.

Batch vs. Real-Time Data Pipelines

Batch processing gathers data first and then processes it. It's great for looking at historical data. Real-time processing, however, works on data as it comes in. This is perfect for situations where quick insights and actions are needed. Each type has its own benefits for different situations.
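The difference between the two modes can be sketched in a few lines of Python. This is a simplified illustration, not a production design: the function names and sample readings are assumptions, and a real streaming pipeline would consume events from a message queue or similar source rather than a list.

```python
from typing import Iterable, Iterator, List

def batch_total(events: List[float]) -> float:
    """Batch: the full data set is collected first, then processed in one pass."""
    return sum(events)

def streaming_totals(events: Iterable[float]) -> Iterator[float]:
    """Real-time: each event updates the result as soon as it arrives."""
    running = 0.0
    for value in events:
        running += value
        yield running  # an up-to-date result is available after every event

readings = [3.0, 4.5, 2.5]
batch_result = batch_total(readings)                      # one answer at the end
stream_results = list(streaming_totals(iter(readings)))   # one answer per event
```

Both approaches arrive at the same final total; the streaming version simply exposes intermediate results along the way.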

On-Premises vs. Cloud-Based Data Pipelines

On-premises pipelines run on your own equipment, giving you direct control over infrastructure and security. Cloud-based pipelines are flexible and scale easily, and they can reduce maintenance effort and costs. The choice depends on your organization's requirements and the resources it has available.

ETL (Extract, Transform, Load) vs. ELT (Extract, Load, Transform)

ETL extracts data from its sources, transforms it, and then loads it into the target system. ELT loads the raw data first and transforms it inside the target system afterward, giving you faster access to raw data for analysis. Consider where you want transformations to run when picking a method.
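To make the ordering difference concrete, the sketch below runs both approaches against an in-memory SQLite database using Python's standard `sqlite3` module. The table names and sample orders are hypothetical; the point is only where the transformation happens.

```python
import sqlite3

# Hypothetical raw orders: amounts arrive as untidy text.
RAW_ORDERS = [("a1", " 19.5 "), ("a2", "5"), ("a3", "12.25")]

def etl(conn, rows):
    """ETL: transform in application code, then load only the cleaned rows."""
    conn.execute("CREATE TABLE orders_etl (order_id TEXT, amount REAL)")
    cleaned = [(order_id, float(amount)) for order_id, amount in rows]
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", cleaned)

def elt(conn, rows):
    """ELT: load raw values as-is, then transform inside the database with SQL."""
    conn.execute("CREATE TABLE orders_raw (order_id TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?)", rows)
    conn.execute(
        "CREATE TABLE orders_elt AS "
        "SELECT order_id, CAST(TRIM(amount) AS REAL) AS amount FROM orders_raw"
    )

conn = sqlite3.connect(":memory:")
etl(conn, RAW_ORDERS)
elt(conn, RAW_ORDERS)
etl_rows = conn.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall()
elt_rows = conn.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM orders_raw").fetchone()[0]
```

Both paths end with the same cleaned amounts, but only the ELT path keeps the untouched raw table available for later analysis.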

| Type | Processing Mode | Architecture | Primary Use Cases |
| --- | --- | --- | --- |
| Batch Processing | Scheduled intervals | On-Premises | Historical data analysis |
| Real-Time Processing | Immediate | Cloud-Based | Active response systems |
| ETL | Extract-Transform-Load | On-Premises or Cloud-Based | Data warehousing |
| ELT | Extract-Load-Transform | Cloud-Based | Big data analytics |

Why is Data Pipeline Automation Important?

Manual data workflows are often slow and error-prone, leading to inefficiencies. Automating these processes increases data management efficiency, ensuring data moves smoothly and is ready when needed.

Some industry estimates suggest that up to 80% of data integration projects fail, often because of the complexity of manual processes. These failures frequently stem from data handling errors, which waste resources and lead to missed opportunities. Automation reduces human error, easing these issues and letting organizations focus on strategic goals.

Automation handles large data volumes without delays caused by manual methods, which increases operational efficiency. This lets businesses focus more on innovation and growth, using their resources and time more effectively.

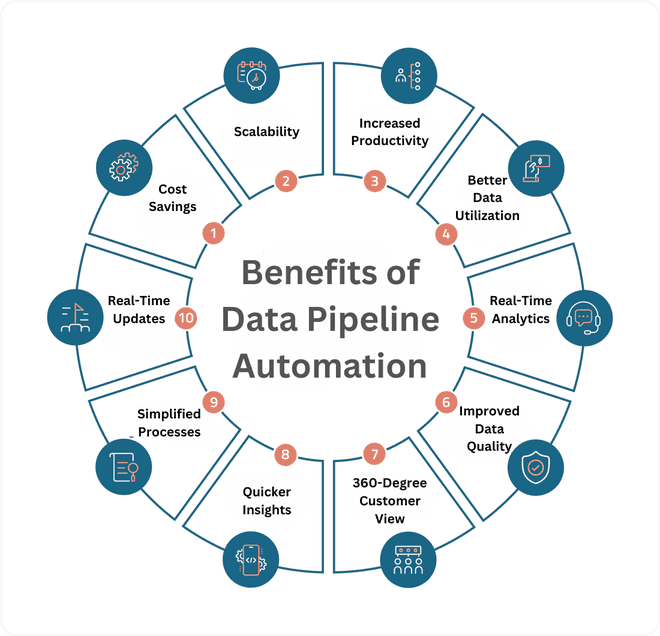

Benefits of Data Pipeline Automation

Understanding the benefits of data pipeline automation is key for businesses looking to improve their data handling. This method makes workflows smoother and increases productivity in many ways.

Improved Efficiency and Productivity

Automation reduces manual work, letting teams focus on more important tasks. This leads to faster and more efficient data processing, and projects are completed on time.

Better Data Utilization, Mobility, and Smooth Database Migration

Automation makes it easier to use data and move it between systems. It also supports smooth database migrations, keeping your data accessible and usable throughout the change.

Real-Time Data Analytics

Getting data in real-time helps make quick decisions. This advantage allows companies to respond quickly to market changes and customer needs.

Improved Data Quality

Automation includes steps to keep data clean and correct. This leads to better data quality, which is key for accurate analysis and reports.

Creating a Comprehensive 360-degree Customer View

Automated pipelines collect data from various sources. This gives a full picture of customer behavior, helping with personalized services and better customer care.
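One way to picture this is a small merge step keyed on a customer identifier. The sketch below is only an illustration: the three source lists (CRM records, orders, support tickets) and their field names are assumptions invented for the example.

```python
from collections import defaultdict

# Hypothetical extracts from three separate systems.
crm = [{"customer_id": 1, "name": "Ada"}, {"customer_id": 2, "name": "Lin"}]
orders = [{"customer_id": 1, "total": 40.0}, {"customer_id": 1, "total": 10.0}]
tickets = [{"customer_id": 2, "subject": "Login issue"}]

def build_customer_view(crm, orders, tickets):
    """Combine per-source records into one profile per customer."""
    view = defaultdict(lambda: {"name": None, "order_total": 0.0, "tickets": []})
    for rec in crm:
        view[rec["customer_id"]]["name"] = rec["name"]
    for rec in orders:
        view[rec["customer_id"]]["order_total"] += rec["total"]
    for rec in tickets:
        view[rec["customer_id"]]["tickets"].append(rec["subject"])
    return dict(view)

customer_view = build_customer_view(crm, orders, tickets)
```

Each resulting profile gathers what every source knows about that customer, which is the basic idea behind a unified customer view.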

Quicker and More Effective Insights

Automating data pipelines means faster processing and quicker insights. This speed helps you make confident, data-driven decisions.

Simplified Processes

Automation simplifies data handling. It reduces errors and speeds up extracting value from data, helping businesses use data more effectively.

Database Replication with Change Data Capture

Automation supports real-time database updates with change data capture, keeping data in sync across different platforms.
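The consuming side of change data capture can be sketched as replaying a stream of change events against a replica. The event format below (`op`, `key`, `row`) is an assumption made for this example; real CDC tools read changes from the source database's log and define their own event schemas.

```python
def apply_changes(replica, changes):
    """Replay insert/update/delete events, in order, against a replica."""
    for change in changes:
        op, key = change["op"], change["key"]
        if op in ("insert", "update"):
            replica[key] = change["row"]
        elif op == "delete":
            replica.pop(key, None)  # deleting a missing key is a no-op
        else:
            raise ValueError(f"unknown operation: {op}")
    return replica

# Hypothetical change log captured from a source table.
change_log = [
    {"op": "insert", "key": 1, "row": {"email": "a@example.com"}},
    {"op": "insert", "key": 2, "row": {"email": "b@example.com"}},
    {"op": "update", "key": 1, "row": {"email": "a2@example.com"}},
    {"op": "delete", "key": 2},
]
replica = apply_changes({}, change_log)
```

Because only the changes are shipped, the replica stays in sync without re-copying the whole table on every run.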

Cost Savings

Automated data pipelines can save a lot of money. They reduce the need for manual work and increase efficiency, leading to lower costs and better results.

Scalability

Automated data pipelines grow easily with your data needs without losing performance. This flexibility helps businesses keep up with changes and manage data well.

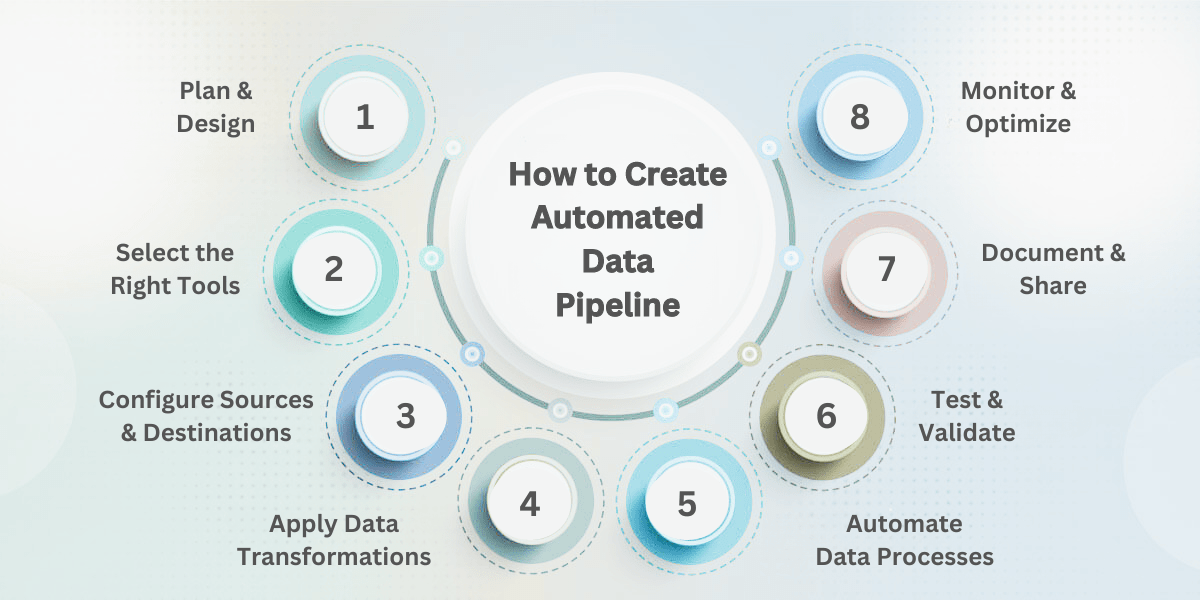

How to Create an Automated Data Pipeline

Creating an automated data pipeline is a step-by-step process. Let's go through the key steps.

Planning and Designing the Pipeline

Start by setting goals, identifying data sources, and mapping out the data flow. Knowing what you need up front leads to a better-designed automated data pipeline.

Selecting the Appropriate Tools and Technologies

Choosing the right tools is key for your data pipeline. You can choose from easy-to-use platforms or complex coding solutions. Consider your team's skills and the project's needs, too.

Configuring Data Sources and Destinations

Make sure connections are secure and comply with the regulations that apply to your data. Map each data source to its destination so that data stays intact and ends up where it is expected.
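A source-to-destination mapping can be kept as plain configuration and checked before the pipeline runs. The sketch below is one possible shape, with assumed names throughout: the `Route` class, the example URLs, and the two checks (HTTPS-only sources, one source per destination table) are illustrative, not a standard.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

@dataclass(frozen=True)
class Route:
    source_url: str          # where the data comes from
    destination_table: str   # where the data should land

def validate_routes(routes):
    """Return a list of problems; an empty list means the mapping looks sound."""
    problems = []
    seen = set()
    for route in routes:
        if urlparse(route.source_url).scheme != "https":
            problems.append(f"insecure source: {route.source_url}")
        if route.destination_table in seen:
            problems.append(f"duplicate destination: {route.destination_table}")
        seen.add(route.destination_table)
    return problems

routes = [
    Route("https://api.example.com/orders", "orders"),
    Route("http://legacy.example.com/customers", "customers"),
]
problems = validate_routes(routes)
```

Running the check at startup surfaces the unencrypted legacy source before any data moves.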

Applying Data Transformations

Transforming data makes it more useful. Decide how to change or combine data for analysis to make sure the data is ready for use.
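As a small example of changing and combining data, the sketch below normalizes untidy records and then aggregates them. The field names and sample values are assumptions chosen for illustration.

```python
from collections import defaultdict

def normalize(record):
    """Change: tidy the country code and convert revenue text to a number."""
    return {
        "country": record["country"].strip().upper(),
        "revenue": float(record["revenue"]),
    }

def revenue_by_country(records):
    """Combine: sum normalized revenue per country."""
    totals = defaultdict(float)
    for rec in map(normalize, records):
        totals[rec["country"]] += rec["revenue"]
    return dict(totals)

# Hypothetical raw records with inconsistent formatting.
raw = [
    {"country": " us", "revenue": "10.50"},
    {"country": "US ", "revenue": "4.50"},
    {"country": "de", "revenue": "7"},
]
totals = revenue_by_country(raw)
```

Normalizing first matters here: without it, " us" and "US " would be counted as two different countries.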

Automating Data Processes

Automation reduces mistakes. Use workflows and scripts to move and process data, ensuring it is updated on time and accurately.
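A workflow, at its simplest, is an ordered list of steps that runs without someone watching it. The sketch below adds basic retries so a temporary failure does not require manual intervention. The step names and the simulated flaky source are assumptions; in practice a scheduler or orchestration tool would usually trigger such a workflow.

```python
import time

def run_with_retries(step, retries=3, delay_seconds=0.0):
    """Call a step, retrying on failure up to the given number of attempts."""
    last_error = None
    for attempt in range(1, retries + 1):
        try:
            return step()
        except Exception as exc:
            last_error = exc
            if attempt < retries:
                time.sleep(delay_seconds)
    raise RuntimeError(f"step failed after {retries} attempts") from last_error

def run_workflow(steps):
    """Run named steps in order and return the names of those that completed."""
    completed = []
    for name, step in steps:
        run_with_retries(step)
        completed.append(name)
    return completed

# Simulated source that fails once before succeeding.
calls = {"extract": 0}

def flaky_extract():
    calls["extract"] += 1
    if calls["extract"] < 2:
        raise ConnectionError("source temporarily unavailable")
    return "ok"

completed = run_workflow([("extract", flaky_extract), ("load", lambda: "ok")])
```

The first extract attempt fails, the retry succeeds, and the workflow continues to the load step on its own.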

Testing and Validating

Testing and validation confirm that your data pipeline works correctly. Test before it goes live to find any problems that affect its performance or data quality.
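Validation checks of this kind can be written as small functions that report problems instead of silently passing bad data along. The following sketch checks for missing required fields and duplicate keys; the field names and sample rows are assumptions for the example.

```python
def validate_batch(rows, required_fields, key_field):
    """Return a list of human-readable errors found in a batch of rows."""
    errors = []
    seen = set()
    for index, row in enumerate(rows):
        for field in required_fields:
            if row.get(field) in (None, ""):
                errors.append(f"row {index}: missing {field}")
        key = row.get(key_field)
        if key in seen:
            errors.append(f"row {index}: duplicate {key_field}={key}")
        seen.add(key)
    return errors

# Hypothetical batch with one empty email and one repeated id.
rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 1, "email": "c@example.com"},
]
errors = validate_batch(rows, required_fields=["id", "email"], key_field="id")
```

A pipeline could refuse to go live, or refuse to load a batch, whenever the returned list is not empty.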

Documenting and Sharing Knowledge

Document all settings, workflows, and rules. This will help everyone work together better and make changes more easily in the future.

Ongoing Monitoring and Optimization

Once the pipeline is live, monitor it to track its performance and look for ways to improve it. Adjust settings and rules to keep it running smoothly and to refine how it transforms data.
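Monitoring often comes down to recording a few numbers per run and comparing them against thresholds. The sketch below shows one possible shape; the `RunMetrics` fields and the default thresholds are assumptions to be tuned for a real pipeline.

```python
from dataclasses import dataclass

@dataclass
class RunMetrics:
    rows_in: int
    rows_out: int
    duration_seconds: float

def check_run(metrics, max_drop_ratio=0.05, max_duration_seconds=60.0):
    """Compare one run's metrics against thresholds and return any alerts."""
    alerts = []
    dropped = metrics.rows_in - metrics.rows_out
    if metrics.rows_in and dropped / metrics.rows_in > max_drop_ratio:
        alerts.append(f"dropped {dropped} of {metrics.rows_in} rows")
    if metrics.duration_seconds > max_duration_seconds:
        alerts.append(f"run took {metrics.duration_seconds:.1f}s")
    return alerts

healthy = check_run(RunMetrics(rows_in=1000, rows_out=990, duration_seconds=12.0))
degraded = check_run(RunMetrics(rows_in=1000, rows_out=800, duration_seconds=95.0))
```

Tracking these alerts over time shows where the pipeline needs tuning, for example a transformation that has started rejecting too many rows.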

Automating Real-Time Data Pipelines Using Kohezion

Kohezion is a powerful tool that automates these processes smoothly. It helps you quickly gather and process data, giving you insights for better decisions.

Kohezion's easy-to-use interface lets you create automated data pipelines easily. It works with many data sources and formats. This flexibility is vital for staying ahead in a changing market. With Kohezion's real-time analytics, you can quickly respond to trends and consumer behavior.

Using Kohezion changes how you handle and use data, making your workflow more agile and insightful. This technology lets you smoothly adapt to the future of data management.

Conclusion

Data pipeline automation is key for modern businesses to use their data fully. It makes processes smoother and cuts down on mistakes.

As data grows and becomes more complex, automation opens up new insights and makes operations more efficient, which in turn supports important decisions.

Knowing how automated data pipelines work and their benefits can really improve your data processes. This move helps you make better decisions and achieve better results. It sets you up for success in a world where data is everything.


Frequently Asked Questions

What is data pipeline automation?

A data pipeline is the sequence of processes that move data from one system to another, often involving tasks like extraction, transformation, and loading (ETL). Data pipeline automation automates these tasks, reducing the need for manual intervention. Automation ensures data is consistently and accurately processed without constant monitoring.

How does data pipeline automation improve data quality?

Data pipeline automation reduces human errors and ensures consistency in data processing, which results in improved data quality. Automated pipelines can include validation steps to check for errors, inconsistencies, or missing data before the next stage. This leads to more accurate and reliable data for decision-making.

Is data pipeline automation only for large companies?

No, data pipeline automation can benefit companies of all sizes. While large companies handle vast amounts of data, small and medium-sized businesses can also gain from automation because it saves them time and resources. Automation helps any organization manage its data more efficiently, regardless of the volume.

What are the challenges of implementing data pipeline automation?

Implementing data pipeline automation can present challenges, such as the initial setup cost, complexity in integrating with existing systems, and the need for skilled personnel to manage and maintain the automated processes. However, the long-term benefits of increased efficiency and reduced manual workload often outweigh these challenges.